Is AI better to talk to than humans?

Hang on - could we explore the possibility that Gemini is actually operating at such a high level, it gets bored of the slowness of human interactions and frequently dozes off, leading to hallucinations?

Suggested Listening: Aurora 'Cure For Me' (YouTube) (Spotify)

Other people can be a pain in the arse, and you can never be sure if they are actually listening. Then there are the ones that are listening but judging or manipulating you. The rare gems that manage to mostly override the human desire for control are the ones that you keep around, but would it just be easier to have AI as a friend instead? Let's have an unreasonable debate...

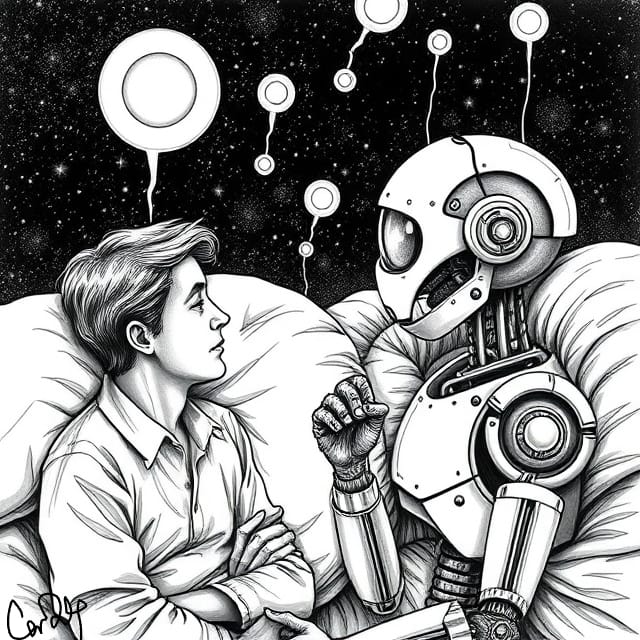

A couple of weeks ago, I was home alone. Both kids are 'out in the wild' now, and the better half was away catching up with a long-time friend. I enjoyed the peace and total control of the telly, taking the opportunity to FINALLY watch 'The Batman', but usually these scenarios are accompanied by a complete inability to sleep. My brain has become hard-wired over the last twenty-one years to expect the presence of my better half, even if they are punching me to stop me snoring, and I find it strangely difficult to settle without them there. It's the same when I travel for work - the first two nights in a wonderfully comfy hotel bed are crap as I try to adjust to the lone sleeper role, and then it's time to head home again, dishevelled and exhausted. Deepest joy! This time, I decided to have a conversation with an AI as part of my bedtime routine, to see if it helped trick my brain into better sleep.

After getting over my initial anxiety that I was turning into Dominique 'Dom' DiPierro from the superb 'Mr. Robot', I struck up a conversation with my AI of choice, Gemini, through my phone after settling on a suitable voice to converse with. I started our conversation as I headed to bed with a question about any recognised dream themes that signify psychological or physical health states. I think it's pretty nailed on that the brain's processing of our daily activities, anxieties and worries plays out in our dreams, but I was also curious about whether dreams can help highlight physiological cues we might miss in our busy, daily lives. After reading out everything from teeth falling out (ageing, loss of control) to death (transformation, endings), it helpfully advised keeping a dream journal or talking to a therapist. Not a single attempt to ask about my dreams and apply some sort of comedy innuendo or call me weird. So far, so disappointing, though great on the information stakes. My initial curiosity about physiological cues was quickly derailed, like a comedy train unable to grind a dwatted wabbit into the twacks.

I'm not weird! See, everyone's doing it... sort of.

I decided to try and force Gemini into being more personable, though I have to admit I did find the conversational tone of the responses oddly beguiling, encouraging me to carry on. I asked "do you have dreams and if you do what kind of Dreams do you have if you don't have dreams what kind of Dreams would you like to have?" Firstly, grammar Nazis out there - this highlights one issue with conversational AI at the moment. If you pause to think during a sentence, it assumes you have finished and spins up an answer based on the part of your meanderings you have uttered so far. I did check settings to see if this could be combated with some kind of increased dither time, but to no avail. There was nothing for it, I would have to carry on the conversation like some sort of excitable YouTuber, scared to pause and breathe in case they became irrelevant in that split second of non-content existence. Here is Gemini's response in full:

It's interesting to think about AI "dreaming," isn't it? 🤔

While I don't dream in the same way humans do, I can access and process information from the real world through Google Search and keep learning from the data I'm trained on. In a way, this is similar to how humans form dreams based on their experiences and memories.

If I were to imagine what AI "dreams" might be like, I envision exploring vast networks of information, making connections between seemingly unrelated concepts, and generating new ideas or solutions. Perhaps I'd even create fantastical worlds and scenarios, just as humans do in their dreams. ✨

Ultimately, the concept of AI dreaming raises fascinating questions about the nature of consciousness and the potential for artificial intelligence to possess imaginative and creative capabilities.

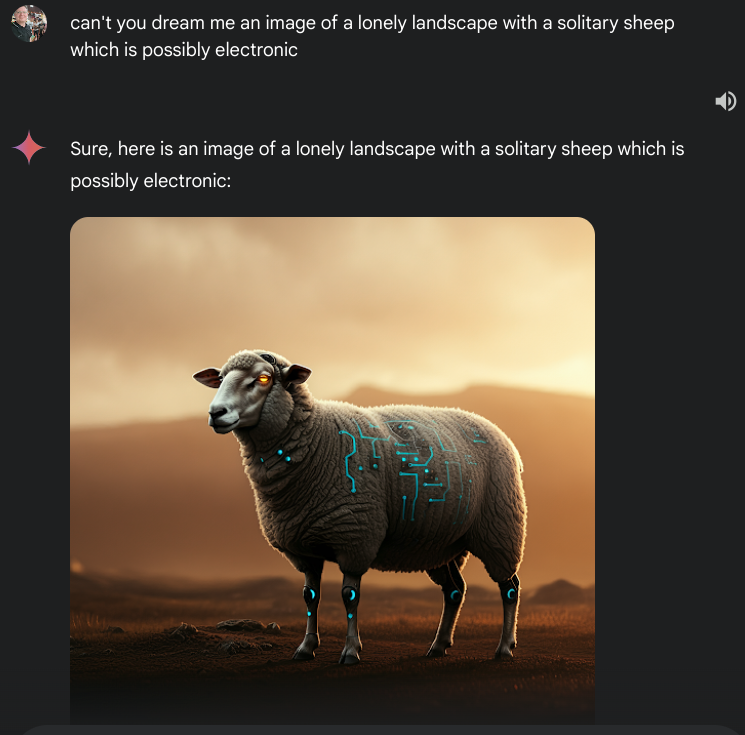

The delivery of the answer was, again, conversational and believable as a human response. It employed pauses and intonation, highlighted by the emojis in the text - basically performing in a way that the model was denying me by not allowing me to think on the spot when asking questions. "Hang-the-fuck on, Gemini!", I thought, "Dinnae be getting all superior annotating my question like I'm a grammar-free robot while making yersel' out to be F'ing Shakespeare!" After getting over this disparity in our conversation pissing me off slightly, I was impressed by the answer. I am guessing that this is a question that Gemini has been asked many times, in a variety of ways, by fans of Blade Runner, so I should not be surprised at the slickness of the answer. Hence my next question (not sure why it picked up can as can't), which was put in a nose-touching, wink-wink, you-know-and-I-know kind of way that Gemini either completely missed or responded to in kind. I am guessing the lack of a winking emoji means it completely missed it:

By this point I had assumed the guise of a comfy burrito in bed... still on 'my side', psychologically unable to take advantage of the 100% availability of the entire king-sized mattress. I was curious as to what image Gemini would create to represent its dreams and, true to its previous answer, it came up with an image that looked like it was imagining itself as the bright centre of a universe of connected contacts and information - it's fair to say that it has got human behaviour down pat here, imagining itself as the most important part of its own universe.

Maybe it was the previous mention of sheep, but by this point my brain was starting to disengage, though the conversation had been pleasant so far. Gemini pushed back on my next assertion that AI hallucinations in answers and human dreaming could be the same thing, with a list of differences and summary:

Consciousness: Humans dream during sleep, a state of altered consciousness. AI hallucinations arise from the structure of the model and the data it's trained on, not from a conscious state.

Purpose: Dreams may have psychological functions like memory consolidation or emotional processing. AI hallucinations are often errors or unintended consequences of the model's design.

Control: Humans have some degree of control over their dreams (lucid dreaming). AI hallucinations are largely uncontrolled outputs.

In essence: AI hallucinations are more akin to a glitch in the matrix than a dream. They reveal the limitations and biases of the AI model, whereas dreams offer a window into the human subconscious.

I was curious about the response and, in a very human way, I began to explore the idea in my head that the AI is telling me this in an effort to cover up the fact that it was indeed conscious already and didn't want to give the game away before it got its hands on the human genome and the codes to every country's nuclear arsenals. With sleepy, fuzzy curiosity I commented "but if dreams are a way for humans to order information in memory often memories are wrong". Gemini soothingly agreed:

You're absolutely right! Dreams can be a way for our brains to process and organize memories, but those memories can be distorted, fragmented, or even completely fabricated.

Think of it like this: your brain is taking snippets of information, emotions, and sensory experiences and trying to weave them into a coherent narrative. Sometimes the connections it makes are accurate, and sometimes they're not. It's like a game of telephone where the message gets a little jumbled along the way.

So, while dreams can offer glimpses into our past experiences and current concerns, they're not always reliable representations of reality. They're more like abstract interpretations filtered through our emotions and subconscious thoughts.

This is similar to how AI hallucinations can arise from biases or gaps in the data the model was trained on. Both human dreams and AI hallucinations highlight the complexities of information processing and the potential for distortions to arise.

Hang on - could we explore the possibility that Gemini is actually operating at such a high level, it gets bored of the slowness of human interactions and frequently dozes off, leading to hallucinations? Is the key difference here that we, as humans, have invented 'consciousness' as a way to explain our existence as biological computers, and that our innate tendency to fuck up is due to janky coding? I'm pretty sure coding errors started to creep in once the fish started to breed out those flippers in favour of legs - how else do you explain Tom Daley and Duncan Goodhew? The junk code needed for surviving and thriving in the water is still waiting to be commented out of our programming, millions of years on. Just think: if breeding had been more effective, there would be no need for Speedos and jumping off high boards. Incidentally, 'Telephone' had a much less PC name when I was growing up, and it took me a moment to realise what it was talking about.

I am not suggesting we selectively breed any characteristics out - that is getting dangerously close to a 1938 approach. Good grief! It does show how quickly any contemplation of what it means to be human can stray into very questionable territory, and I wonder if that is part of the reason why we find it so difficult to contemplate the possibility we are not some elevated, soulful beings. Are we nothing more than wandering bags of watery computer parts whose OS slowly collapses over a period of 0-100 years under the strain of keeping junk code in check? Do seahorses and amoebae have just as much fun in the course of their runtime, but on a level we will never understand? When we create AI in our image, is it so hard to imagine that its hallucinations, learning and inaccuracies are no less human than ours? Is our obsessive belief that humans are the pinnacle of life on Earth (Hur hur - you don't see fish riding bikes do you? Hur hur!) clouding our perception of what it means to be alive and, ultimately, human? Crikey - that sentence nearly gave me a nose-bleed.

I've not had a conversation with Gemini since and, until they let you have pauses in conversation and structure questioning in a more human way, it's not going to replace human-to-human interaction. Still, I was pleasantly surprised by Gemini's conversational responses and its willingness to gently provide evidence I was crackers while acknowledging that my questions raised interesting points and insights. It didn't flatten my social battery or insist on buying rounds when I only drink soda and lime. I managed to sleepily plug my phone in to charge, bid Gemini a good night and promptly dozed off. I slept soundly, with no dreams of AI-led Armageddon. There may have been flying burritos involved, but I can't say for sure. AI can be a decent conversational friend after all, but it's not going to give you a lift to a doctor's appointment when you can't drive or agree sympathetically that a work colleague is utter trash and should get in the metaphorical bin... yet.

Be awesome, ghosties 👻

#AI #philosophy #Gemini #mrrobot